Humanities

Safe at Home

If the hype is to be believed, today’s smart home is your best friend. The TV, refrigerator, thermostat, lights and even the coffeemaker can be controlled with the press of a button on your smartphone. But the much-touted “Internet of Things”—IoT for short—raises a challenging question: Can smart homes provide a wealth of conveniences and also protect privacy?

Because this technology requires household items to be connected to each other and to the Internet, hackers have a potential weakness they can exploit to steal your personal information, bank account logins, credit card numbers and more.

Students at the UO explored this potential drawback in a partnership between philosophy and computer science that professors Colin Koopman and Jun Li recently developed.

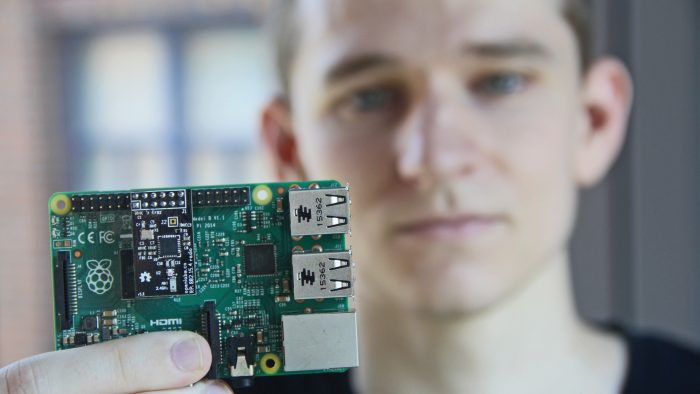

As a project for the philosophy department and the Center for Cyber Security and Privacy, philosophy student Robert Stanton (above) and two students in computer science built a small working model with the same fundamental characteristics as a smart home: a computer connected to devices that collect and transmit data, all of which are connected to the Internet. Their system included a laptop wired to smaller, handheld computers, which in turn were wired to small sensors that can gather and send information such as room temperature.

The question, Stanton said, was whether this “smart” system could run tasks without compromising the user’s ability to keep the information private.

But privacy is an abstract concept—it’s hard to define exactly what it includes. This is especially difficult in an IoT environment, so a philosophical examination of privacy was necessary before Li’s computer science students could design the system. Thus the collaboration with Koopman, an associate professor of philosophy.

Stanton, under Koopman’s guidance, developed concrete expectations for the experiment based on his consideration of privacy from multiple perspectives. He explored theories that define privacy as secrecy or, alternatively, the sharing of information within specific social contexts—that is, you might tell your neighbor about a medical concern but would consider your privacy violated if your doctor shared this information with a neighbor.

Neither theory could be easily adapted for the experiment, so Stanton chose a definition of privacy by Alan Westin, professor emeritus of public law and government at Columbia University.

Westin defined privacy as “the claim of individuals to determine for themselves when, how and to what extent information about them is communicated,” Stanton said. From that, he developed concrete privacy goals for the experiment: Is the user able to turn all devices on and off remotely? Can the user monitor all data sent across the network?

Working through the details of the experiment with students from computer science, Stanton said, was a “frustrating but rewarding” exercise in communicating across disciplines.

“When the computer science students were talking about writing code and how the devices were connected to do their tasks, there was a lot of technical language which they had to demystify for me,” Stanton said. “At the same time, I had to explain a lot of philosophical concepts in a common-sense sort of way.”

The team found, indeed, that their system could be programmed to meet Stanton’s criteria for privacy. Through the laptop, the user could monitor temperature readings and other data in real time and could disable all sensors with the press of a button.

But the investigation also illuminated complications that face the industry.

Most IoT networks involve a third party—in addition to the maker of the product and the consumer who buys it, another group typically runs the Internet servers through which information flows. That’s another possible entry point for access to the user’s data.

“We got around this issue by using a private server, but it’s unlikely that most consumers will have that option,” Stanton said. “One solution would be to have stricter policies around what the third party can do with the data. But that’s a question for the industry.”

—Matt Cooper